Last Updated | February 28, 2025

As technology reshapes healthcare, skin cancer detection is no longer confined to your doctor's office. Many businesses now offer skin cancer apps as a way to catch the disease earlier and save lives. With 1 in 5 Americans expected to develop skin cancer by age 70, according to the American Academy of Dermatology (2023), early detection is more urgent than ever. Let's look at how integrating such an app into your offerings can improve patient care and drive better engagement, and then review the top 10 skin cancer apps leading the market.

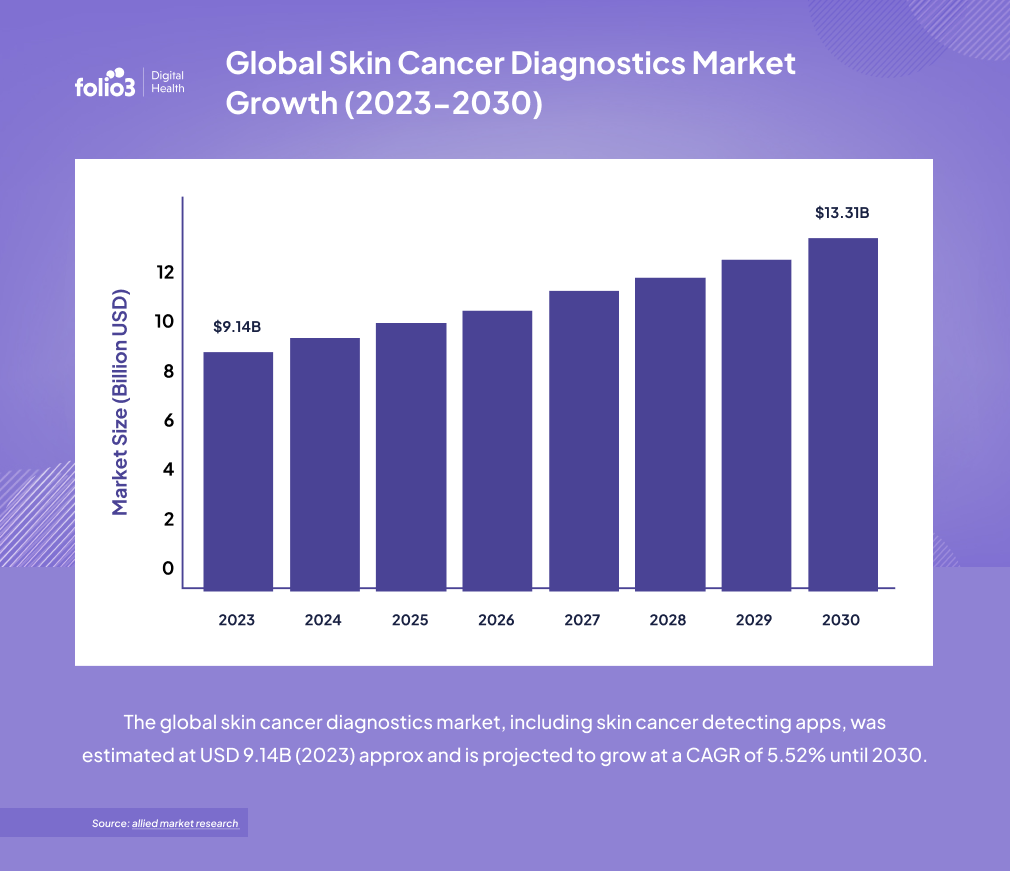

Market Insights

The global skin cancer diagnostics market, including skin cancer detection apps, was estimated at approximately USD 9.14 billion in 2023 and is projected to grow at a compound annual growth rate (CAGR) of 5.52% through 2030.
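As a rough check on what that growth rate implies, compounding the 2023 estimate at 5.52% a year for seven years (assuming 2023 as the base year and 2030 as the end year) gives:

$$
V_{2030} = V_{2023}\,(1 + r)^{n} = 9.14 \times (1.0552)^{7} \approx 9.14 \times 1.457 \approx 13.3 \ \text{billion USD}
$$

This is simple arithmetic on the two figures above, not a separately published forecast.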

Cancer is the uncontrolled growth of abnormal cells that take over the nutrients and space of normal, healthy cells. Skin cancer is the excessive growth of abnormal cells in the skin, and it most often develops on sun-exposed areas such as the face, lips, ears, scalp, neck, arms, hands, and legs. However, it can also develop on less exposed areas, such as the palms.

How Does a Skin Cancer App Work?

A skin cancer app combines AI with high-resolution imaging to help people monitor their skin health. Users photograph moles or lesions, and the app analyzes characteristics such as asymmetry, color, and size to produce a risk assessment (for example, low, suspicious, or concerning).
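To make the asymmetry check concrete, here is a minimal Python sketch. It assumes the app has already segmented the lesion into a binary mask (1 = lesion pixel), which is a hypothetical upstream step, and it scores asymmetry by mirroring the lesion across both axes of its bounding box. The `risk_label` function and its cut-offs are illustrative only, apart from the commonly cited 6 mm diameter guideline; border irregularity is left out for brevity, and none of this is clinically validated.

```python
from typing import List

Mask = List[List[int]]  # segmentation mask: 1 = lesion pixel, 0 = surrounding skin


def crop_to_lesion(mask: Mask) -> Mask:
    """Crop the mask to the bounding box of its lesion pixels."""
    rows = [i for i, row in enumerate(mask) if any(row)]
    if not rows:
        raise ValueError("mask contains no lesion pixels")
    cols = [j for j in range(len(mask[0])) if any(row[j] for row in mask)]
    return [row[cols[0]:cols[-1] + 1] for row in mask[rows[0]:rows[-1] + 1]]


def asymmetry_score(mask: Mask) -> float:
    """0.0 = symmetric about both bounding-box axes; values near 1.0 = highly asymmetric."""
    m = crop_to_lesion(mask)
    area = sum(sum(row) for row in m)

    def mismatch(mirrored: Mask) -> float:
        # Pixels covered by the lesion or its mirror image but not both (XOR),
        # normalised so the result equals 1 - overlap / area.
        diff = sum(a != b for r1, r2 in zip(m, mirrored) for a, b in zip(r1, r2))
        return diff / (2 * area)

    left_right = [row[::-1] for row in m]  # mirror across the vertical axis
    top_bottom = m[::-1]                   # mirror across the horizontal axis
    return (mismatch(left_right) + mismatch(top_bottom)) / 2


def risk_label(asymmetry: float, diameter_mm: float, n_colors: int) -> str:
    """Hypothetical rule: count ABCD-style warning flags and map them to a risk tier."""
    flags = sum([
        asymmetry > 0.2,    # hypothetical asymmetry cut-off
        diameter_mm > 6.0,  # the commonly cited "larger than 6 mm" guideline
        n_colors >= 3,      # hypothetical colour-variation cut-off
    ])
    if flags == 0:
        return "low"
    return "suspicious" if flags == 1 else "concerning"
```

For example, an L-shaped mask such as `[[1, 0], [1, 1]]` scores about 0.33, while a filled square scores 0.0.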

Many skin cancer apps, including free ones, also track changes over time, store images securely, and offer personalized risk analysis. Self-exam reminders encourage regular checks, and telehealth integration lets users share results with dermatologists for remote consultations.
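Change tracking can be as simple as comparing measurements between photos. The sketch below assumes each photo yields a hypothetical `Measurement` record with an estimated diameter, and flags any visit where the lesion grew by more than a configurable fraction (20% here, an arbitrary illustrative value) since the previous photo.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class Measurement:
    taken_on: date
    diameter_mm: float  # hypothetical field: diameter estimated from the photo


def flag_growth(history: List[Measurement], threshold: float = 0.2) -> List[date]:
    """Return the dates on which the lesion's diameter grew by more than
    `threshold` (a fraction, e.g. 0.2 = 20%) since the previous photo."""
    ordered = sorted(history, key=lambda m: m.taken_on)  # photos may be stored out of order
    flagged = []
    for prev, curr in zip(ordered, ordered[1:]):
        if prev.diameter_mm <= 0:
            continue  # skip unusable measurements
        growth = (curr.diameter_mm - prev.diameter_mm) / prev.diameter_mm
        if growth > threshold:
            flagged.append(curr.taken_on)
    return flagged
```

A flagged date would be a natural trigger for suggesting a dermatologist review rather than a diagnosis in itself.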

Global Skin Cancer Diagnostics Market Growth (2023-2030)

Essential Features of a Skin Cancer App

Some of the must-have features of a skin cancer app include:

- Picture quality control ensures that the skin cancer app takes clear, high-resolution photos to accurately analyze skin lesions.

- ABCD of skin cancer educates users on the Asymmetry, Border irregularity, Color variation, and Diameter criteria to help them recognize potential signs of melanoma.

- Lesion organization allows users to monitor changes in their moles and lesions over time by comparing old and new images.

- 3D body map visualizes the body and lets users precisely locate and track the distribution of skin lesions.

- Tele-dermatology facilitates remote consultations with dermatologists, so users can get professional input without in-person appointments.

- Reminders prompt users to conduct regular skin self-exams, which improves monitoring consistency and makes early detection more likely.

- Image tagging helps users add notes and labels to images. This provides valuable context for tracking changes and observations.
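Picture quality control can start with simple checks before a photo is ever analyzed. This sketch uses the variance of the Laplacian, a common sharpness heuristic: blurry images have weak edges and therefore a low variance. It works on a grayscale image represented as nested lists (an assumption; a real app would use an image library), and the blur and brightness thresholds are hypothetical values that would need tuning on real photos.

```python
from statistics import mean, pvariance
from typing import List

Gray = List[List[float]]  # grayscale pixel values in the range 0-255


def laplacian_variance(img: Gray) -> float:
    """Variance of the 4-neighbour Laplacian response; sharp photos have strong
    edges (high variance), while blurry photos score low."""
    h, w = len(img), len(img[0])
    if h < 3 or w < 3:
        raise ValueError("image must be at least 3x3 pixels")
    responses = [
        img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1] - 4 * img[y][x]
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    return pvariance(responses)


def check_photo(img: Gray, blur_threshold: float = 100.0,
                min_brightness: float = 40.0, max_brightness: float = 220.0) -> List[str]:
    """Return a list of problems; an empty list means the photo passes.
    All thresholds are hypothetical placeholders."""
    problems = []
    if laplacian_variance(img) < blur_threshold:
        problems.append("blurry")
    brightness = mean(v for row in img for v in row)
    if brightness < min_brightness:
        problems.append("too dark")
    elif brightness > max_brightness:
        problems.append("too bright")
    return problems
```

In a design like this, a failed check would prompt the user to retake the photo instead of sending a poor image for analysis.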

Top 10 Skin Cancer Detection Apps (Free vs. Paid)

1. SkinIO

SkinIO is a paid skin cancer detection app that uses total body photography to track changes in your skin. Capturing the images takes about ten minutes. The photos are not stored on your phone; instead, a dermatologist reviews them securely to check for any cause for concern. The user is then notified whether any action is required.

2. MoleMapper

MoleMapper is a free skin mapping app developed by Oregon Health & Science University. It provides tools to track moles over time. Users photograph zones of their body, the app maps the moles within each zone, and users can tap each mole to record its measurements. The app keeps a log of these measurements for later reference.

3. MoleScope

MoleScope, a paid skin cancer screening app, comes with a high-resolution camera attachment for your smartphone that takes magnified, well-lit pictures of moles. Like other skin cancer apps, it offers mole mapping, an image library for easier tracking, and reminders to perform skin exams.

4. SkinVision

SkinVision is a paid skin cancer app whose in-app camera photographs moles and returns one of three risk assessments within 30 seconds: low risk, low risk with symptoms to track, or high risk. SkinVision also lets you catalog photos and their assessments to track how moles develop over time.

5. Miiskin

Miiskin is a freemium skin cancer app that focuses on whole-body skin self-examination and mole tracking. It lets users take full-body pictures and close-up images. Its Body Maps feature documents the location of moles, and its comparison tool highlights changes in skin lesions over time. Miiskin also offers a premium service that lets users connect with dermatologists for remote consultations.

6. DermCheck

The DermCheck skin cancer app facilitates remote consultations with dermatologists. Users upload images of their skin for a board-certified dermatologist to review and receive personalized feedback. The app is designed to give users quick access to professional dermatological opinions.

7. First Derm

First Derm is a paid skin cancer detection app that lets users submit photos and a description of their skin condition to board-certified dermatologists for diagnosis and treatment advice. It focuses on quick turnaround times and gives users convenient, confidential access to experts.

8. UMSkinCheck

UMSkinCheck is a free skin cancer app developed by the University of Michigan. It guides users through thorough skin self-exams with step-by-step instructions and visual aids. Users can document their findings and track changes in their skin over time.

9. DermAssist (Google Health)

DermAssist is an AI skin analysis app that identifies potential skin issues by analyzing users' photos. The tool provides a list of possible conditions and information about them, but it is not a substitute for an expert diagnosis.

10. Aysa

Aysa is an AI-powered screening app that checks the symptoms of skin conditions. Users describe their symptoms, and Aysa suggests possible causes and recommends next steps. Although the app is not exclusively for skin cancer, it can help users spot concerning skin changes and know when to visit a dermatologist.

Benefits of Using a Skin Cancer App

For Business Owners

- Revenue Generation: Monetize through app sales, subscriptions, or in-app purchases, plus affiliate partnerships with healthcare providers, insurers, or skincare brands.

- Market Differentiation: A cutting-edge health tech solution can set a business apart in a competitive field.

- Data Insights: Collecting anonymized user data helps identify trends in skin health that are valuable for research or marketing. This data can improve app features and user experience.

For Healthcare Providers

- Early Detection: Apps with AI-powered skin analysis can help identify potential skin cancer cases earlier, improving patient outcomes.

- Reduced Workload: Triage patients more efficiently by prioritizing high-risk cases flagged by the app and reducing unnecessary visits for benign conditions.

- Patient Engagement: Encourage patients to take a proactive role in monitoring their skin health.

- Remote Monitoring: Enable telehealth consultations by allowing patients to upload images and share results with their dermatologist.
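The triage idea above amounts to a priority queue. In this minimal sketch, `risk_score` is assumed to be a 0-1 value produced by the app's model, and `TriageQueue` is a hypothetical helper built on Python's standard `heapq` module that hands clinicians the highest-risk case first.

```python
import heapq
import itertools
from typing import List, Tuple


class TriageQueue:
    """Serves the highest-risk flagged case first; equal scores are served
    in the order they arrived."""

    def __init__(self) -> None:
        self._heap: List[Tuple[float, int, str]] = []
        self._arrival = itertools.count()  # tie-breaker that preserves arrival order

    def add(self, case_id: str, risk_score: float) -> None:
        # heapq is a min-heap, so store the negated score to pop the highest risk first.
        heapq.heappush(self._heap, (-risk_score, next(self._arrival), case_id))

    def next_case(self) -> str:
        if not self._heap:
            raise IndexError("no cases waiting")
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)
```

The arrival counter breaks ties, so two cases with the same score are reviewed in the order they were submitted.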

For End Users (Patients or Individuals)

- Convenience: Monitor skin changes from home without visiting a dermatologist, with 24/7 access to self-checks and reminders.

- Early Detection: AI-powered analysis can flag suspicious moles or lesions at an early stage, increasing the chances of successful treatment.

- Education and Awareness: Learn about skin cancer risk factors, prevention tips, and the importance of regular skin checks, and receive personalized recommendations based on skin type and risk profile.

Top 10 Features To Add in a Skin Cancer App

How to Develop a Skin Cancer App: The Step-by-Step Process

Design and Planning

This initial phase defines the skin cancer app's purpose and target audience. Planning should cover essential features, such as lesion classification, and align them with users' needs. A well-designed interface makes navigation and image capture easy, which is essential for user adoption and accurate data input.

Data Collection and Preparation

The accuracy of any machine learning model depends heavily on the quality and variety of its training data, so gathering a large, accurately labeled dataset of dermoscopic images is a critical step. Data augmentation techniques, such as flipping and rotating images, help address class imbalances and other limitations by exposing the model to more varied samples of each lesion type, which helps it detect different types of skin cancer accurately.
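One common way to address class imbalance is to oversample the rarer classes with rotated and flipped copies, since a lesion's orientation in the frame is arbitrary. The sketch below is a minimal, pure-Python illustration under that assumption; images are nested lists, the function names are hypothetical, and a real pipeline would typically apply such transforms on the fly with an image or ML library.

```python
import random
from typing import Dict, List

Image = List[List[int]]  # an image as rows of pixel values


def rot90(img: Image) -> Image:
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def hflip(img: Image) -> Image:
    """Mirror an image left to right."""
    return [row[::-1] for row in img]


def dihedral_variants(img: Image) -> List[Image]:
    """The 8 combinations of 90-degree rotations and mirroring; the first entry is the original."""
    variants, current = [], img
    for _ in range(4):
        variants.extend([current, hflip(current)])
        current = rot90(current)
    return variants


def balance_with_augmentation(dataset: Dict[str, List[Image]], seed: int = 0) -> Dict[str, List[Image]]:
    """Oversample each smaller class with rotated/flipped copies until every
    class has as many images as the largest one."""
    rng = random.Random(seed)  # seeded so the augmented dataset is reproducible
    target = max(len(images) for images in dataset.values())
    balanced = {}
    for label, images in dataset.items():
        if not images:
            raise ValueError(f"class {label!r} has no images to augment")
        augmented = list(images)
        while len(augmented) < target:
            source = rng.choice(images)
            augmented.append(rng.choice(dihedral_variants(source)[1:]))  # skip the unmodified original
        balanced[label] = augmented
    return balanced
```

Oversampling like this evens out class counts, but it cannot add genuinely new lesions, so collecting more real examples of rare classes still matters.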

Machine Learning Model Development

Selecting an appropriate convolutional neural network (CNN) architecture, such as Inception v3 or ResNet50V2, is essential for effective image analysis. With transfer learning, a network pretrained on a large general image dataset (such as ImageNet) is then trained on the prepared lesion dataset, so it reuses previously learned visual features instead of starting from scratch. The model's performance is evaluated and fine-tuned for accuracy in detecting skin cancers such as melanoma, basal cell carcinoma, and squamous cell carcinoma.
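When evaluating the model, overall accuracy can be misleading because benign lesions usually far outnumber malignant ones. Sensitivity (the share of cancers caught) and specificity (the share of benign lesions correctly cleared) give a clearer picture. A minimal sketch, assuming binary labels where 1 means malignant:

```python
from typing import Dict, Sequence


def screening_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Binary screening metrics; 1 = malignant, 0 = benign."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)  # cancers correctly flagged
    tn = sum(t == 0 and p == 0 for t, p in pairs)  # benign lesions correctly cleared
    fp = sum(t == 0 and p == 1 for t, p in pairs)  # false alarms
    fn = sum(t == 1 and p == 0 for t, p in pairs)  # missed cancers

    def ratio(num: int, den: int) -> float:
        return num / den if den else float("nan")

    return {
        "accuracy": ratio(tp + tn, len(pairs)),
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
    }
```

For instance, with four malignant and six benign cases, a model that flags only one cancer scores 70% accuracy but just 25% sensitivity.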

Model Conversion and Integration

To enable efficient real-time analysis on mobile devices, the trained machine learning model must be converted into a mobile-friendly format, such as Core ML for iOS or TensorFlow Lite for Android. This allows the model to run smoothly on smartphones and quickly analyze images of skin lesions captured by the device's camera.
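Part of what makes converted models practical on phones is post-training quantization, which the TensorFlow Lite converter can apply (for example via `converter.optimizations = [tf.lite.Optimize.DEFAULT]`). The pure-Python sketch below only illustrates the general affine int8 scheme, storing each float as an 8-bit integer plus a shared scale and zero point; it is not the actual TensorFlow Lite or Core ML implementation.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float, int]:
    """Affine int8 quantization: q = round(x / scale) + zero_point, clamped to [-128, 127]."""
    lo = min(min(values), 0.0)  # the range must contain 0 so that 0.0 stays exact
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255 or 1.0  # 256 int8 levels; fall back to 1.0 if all values are 0
    zero_point = round(-128 - lo / scale)
    q = [max(-128, min(127, round(x / scale) + zero_point)) for x in values]
    return q, scale, zero_point


def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Map int8 codes back to approximate float values."""
    return [(v - zero_point) * scale for v in q]
```

Each value is recovered to within half a quantization step (`scale / 2`), while storage drops from 32 bits to 8 bits per float32 weight.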

Mobile App Development

Developers build the mobile application using platform-specific development tools, such as Xcode for iOS and Android Studio for Android. Integrating the camera module lets users easily capture images of skin lesions, while the user interface clearly displays analysis results and risk assessments. This phase focuses on creating a functional, intuitive app that effectively delivers the AI-powered detection capabilities.
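On the results screen, the model's raw output has to be turned into something users can read. This sketch assumes a hypothetical four-class model that returns one raw score (logit) per class, converts the scores to probabilities with softmax, and maps the combined malignancy probability to low, suspicious, or concerning tiers; the class order and cut-offs are placeholders.

```python
import math
from typing import Dict, List, Union

# Hypothetical label order for a four-class model.
CLASSES = ["benign", "melanoma", "basal cell carcinoma", "squamous cell carcinoma"]
MALIGNANT = {"melanoma", "basal cell carcinoma", "squamous cell carcinoma"}


def softmax(logits: List[float]) -> List[float]:
    """Turn raw model scores into probabilities (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def result_for_display(logits: List[float], suspicious_at: float = 0.3,
                       concerning_at: float = 0.6) -> Dict[str, Union[str, float]]:
    """Summarise one prediction for the results screen; thresholds are hypothetical."""
    if len(logits) != len(CLASSES):
        raise ValueError("expected one score per class")
    probs = softmax(logits)
    p_malignant = sum(p for label, p in zip(CLASSES, probs) if label in MALIGNANT)
    if p_malignant >= concerning_at:
        risk = "concerning"
    elif p_malignant >= suspicious_at:
        risk = "suspicious"
    else:
        risk = "low"
    top_class = CLASSES[probs.index(max(probs))]
    return {"top_class": top_class, "malignant_probability": round(p_malignant, 3), "risk": risk}
```

Showing the risk tier alongside the most likely class keeps the message simple while still telling the user why a follow-up is suggested.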

Testing and Refinement

Thorough testing across a range of devices helps verify the app's accuracy and reliability. User feedback and performance metrics help refine the skin cancer app, addressing issues with image quality, classification accuracy, or user experience. This iterative approach helps the app meet user expectations and perform consistently.
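One concrete refinement step is choosing the decision threshold on a held-out validation set. For a screening tool, a team might require a minimum sensitivity and accept more false alarms in return. A minimal sketch, assuming the model outputs a malignancy probability per image and flags a lesion when that probability is at or above the threshold:

```python
import math
from typing import Sequence


def threshold_for_sensitivity(scores: Sequence[float], labels: Sequence[int],
                              target: float = 0.95) -> float:
    """Highest decision threshold whose sensitivity on the validation set is at least `target`.

    scores: model's malignancy probability per image; labels: 1 = malignant, 0 = benign.
    """
    if not 0 < target <= 1:
        raise ValueError("target must be in (0, 1]")
    positives = sorted((s for s, y in zip(scores, labels) if y == 1), reverse=True)
    if not positives:
        raise ValueError("validation set contains no malignant cases")
    # At least this many malignant cases must score at or above the threshold
    # (the small epsilon guards against floating-point error in target * n).
    needed = max(1, math.ceil(target * len(positives) - 1e-9))
    return positives[needed - 1]
```

For example, with malignant scores of 0.9, 0.8, 0.4, and 0.2, a 75% target yields a threshold of 0.4; any benign lesion scoring 0.4 or higher would then be flagged as well, which is the trade-off to weigh.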

Regulatory Compliance and Ethical Considerations

Adhering to healthcare and data protection regulations, such as HIPAA and GDPR, safeguards user privacy and data security. It is essential to implement secure data handling practices and to state clearly that the skin cancer detection app is a supportive tool, not a substitute for professional medical advice. This step helps build trust and ensures responsible use of the app.
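One common secure-handling practice is pseudonymizing records before they are used for analytics, replacing direct identifiers with a keyed hash. This sketch uses Python's standard `hmac` and `hashlib` modules; the field names are hypothetical, and the secret key is assumed to be stored separately from the data. Pseudonymization is a safeguard rather than full anonymization: under GDPR, pseudonymized data is still personal data.

```python
import hashlib
import hmac
from typing import Any, Dict

# Hypothetical field names for direct identifiers in an exported record.
DIRECT_IDENTIFIERS = {"patient_id", "name", "email", "phone", "date_of_birth"}


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Keyed hash (HMAC-SHA256): the same patient always maps to the same token,
    but the token cannot be recomputed without the secret key."""
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_analytics(record: Dict[str, Any], secret_key: bytes) -> Dict[str, Any]:
    """Drop direct identifiers and replace the patient ID with a pseudonym."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["pseudonym"] = pseudonymize(record["patient_id"], secret_key)
    return cleaned
```

Because the pseudonym is stable, analysts can still follow one patient's lesion history over time without seeing who the patient is.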

Deployment and Maintenance

The final step is releasing the skin cancer app on platforms like the App Store and Google Play to make it accessible to a broad audience. Regular updates fix bugs and improve compatibility with new devices and operating systems, while periodically retraining the model on new data maintains and improves its accuracy over time.

How Much Does it Cost to Develop a Skin Cancer App?

| App Type | Features | Estimated Cost (USD) |
| --- | --- | --- |
| Basic App | Mole tracking, photo storage, reminders | 20,000–50,000 |
| Intermediate App | AI-based analysis, risk assessment, user-friendly UI/UX | 50,000–150,000 |
| Advanced App | Dermatologist reviews, FDA/CE compliance, advanced AI, cloud integration | 150,000–500,000+ |

Factors Affecting The Cost of Developing a Skin Cancer App

Features and App Complexity

- AI-based diagnostics for image analysis increase development costs.

- Telemedicine integration allows real-time consultations and messaging, increasing the app’s complexity.

- More features like e-prescriptions or patient records mean higher development expenses.

Regulatory Compliance and Security

- Data protection (HIPAA, GDPR) requires robust security measures.

- Certification processes, such as FDA clearance or CE marking, add significant time and cost.

Platform and Technology

- Targeting multiple platforms (iOS, Android, web) increases development workload, team size, and cost.

- The development approach matters: native development offers better performance but is usually more expensive than a cross-platform approach.

User Experience and Design

- Intuitive UI/UX design enhances user engagement but requires skilled designers.

Development Team and Expertise

- A multidisciplinary team increases project costs: more headcount means more capital is required.

- Experts with specialized skills, such as AI and healthcare regulations, command higher salaries.

How to Choose The Best Skin Cancer App?

1. Medical Accuracy and Validation

- FDA Approval or CE Marking: Look for skin cancer apps that have been cleared or approved by regulators such as the FDA (U.S.) or that carry a CE mark (Europe). This indicates that the app meets certain safety and efficacy standards.

- Clinical Validation: Check whether the skin cancer app has been tested in clinical studies published in peer-reviewed journals. Apps backed by scientific research are more likely to be reliable and accurate.

- AI and Algorithm Quality: Check that the app's AI or machine learning algorithms were trained on diverse data covering a wide range of skin types and conditions.

2. Features and Functionality

- Image Analysis: The app should allow high-quality image uploads for accurate analysis.

- Risk Assessment: It should provide a clear risk assessment (e.g., low, medium, high) for potential skin cancer.

- Tracking and Monitoring: Choose applications that let you track changes in moles or lesions over time.

3. Ease of Use

- User Interface: The skin cancer app should be easy to navigate.

- Image Capture Guidance: The mole checker app should provide instructions on how to take clear, well-lit photos.

- Accessibility: Check whether the app is compatible with your device (iOS, Android) and supports multiple languages if need be.

4. Privacy and Security

- Data Protection: Check that the app's privacy policy protects your personal health data and does not allow it to be shared without your consent.

- Encryption: Look for apps that use encryption to protect your images and information.

5. Professional Involvement

- Dermatologist Review: A few skin cancer apps offer the option to have your images reviewed by a board-certified dermatologist for an additional fee.

- Integration with Healthcare Providers: Apps that allow you to share results with your doctor can be more useful for follow-up care.

Connect with Folio3 Digital Health for an AI-Driven Skin Cancer Detecting App

Demand for AI-powered healthcare solutions is rising, and skin cancer detection apps are no exception. Because early diagnosis plays a key role in saving lives, the opportunity to develop a skin cancer detection app has never been better. Partner with Folio3 Digital Health to leverage deep healthtech expertise in developing your AI-driven skin cancer detection app. Our solutions are HIPAA-compliant and built on the FHIR and HL7 interoperability standards, ensuring secure and seamless data exchange across healthcare systems. We specialize in fitness app development services, custom healthcare app development, and much more!

Conclusion

The fight against skin cancer revolves around smarter, faster detection that saves lives. A well-designed skin cancer app does not replace dermatologists but supports them with AI-driven insights for accurate and early detection!

Frequently Asked Questions

What are the top five skin cancer detection apps?

MoleMapper, MoleScope, SkinVision, Miiskin, and DermaSensor.

Is the app a replacement for a dermatologist/oncologist?

No. The app is designed as an educational tool to help users assess their skin but should not replace professional medical advice.

What type of skin conditions can the app detect?

The app analyzes moles and lesions for potential indicators of melanoma, basal cell carcinoma, and squamous cell carcinoma. It may also detect benign skin irregularities.

Can the app detect non-melanoma skin cancer?

Yes, some apps can help detect non-melanoma skin cancer, but they are not a substitute for seeing a dermatologist.

About the Author

Naqqash Khan

As a seasoned .NET Developer, I am dedicated to creating innovative digital health solutions that improve patient outcomes and streamline healthcare processes. Working in the Digital Health division of Folio3, I have a wealth of experience utilizing the latest technologies to craft highly scalable, HIPAA-compliant, and secure software systems. My experience includes developing web and mobile applications, implementing RESTful APIs, and utilizing cloud computing technologies such as AWS/Azure for scalable and secure data storage and processing. If you're looking for a professional who can turn your digital health vision into a reality, connect with me to discuss how we can work together to revolutionize healthcare through technology.